Related News

Last updated: 2026-02-05 06:03

AI security worries stall enterprise production deployments | TechTarget

2026-02-05 05:20 www.techtarget.com

Nvidia AI chip sales to China stalled by US security review, FT reports | Reuters

2026-02-05 04:44 www.reuters.com

Heartland sheriff's office warns of privacy, security risks with AI-generated photos - KFVS12

2026-02-05 03:55 www.kfvs12.com

AI and Regulation Redefine Application Security, New Global Study Finds

2026-02-05 03:16 www.itsecurityguru.org

AI is sharing security evasion tips on forum run by agents - SDxCentral

2026-02-05 03:15 www.sdxcentral.com

Autonomous attacks ushered cybercrime into AI era in 2025 - Cybersecurity Dive

2026-02-05 03:06 www.cybersecuritydive.com

Varonis acquires AI TRiSM - Global Security Mag Online

2026-02-05 03:05 www.globalsecuritymag.fr

Detecting backdoored language models at scale | Microsoft Security Blog

2026-02-05 02:53 www.microsoft.com

OpenClaw's an AI Sensation, But Its Security a Work in Progress - Bloomberg

2026-02-05 02:37 www.bloomberg.com

Cyberhaven unveils unified AI-driven data security platform - IT Brief UK

2026-02-05 01:30 itbrief.co.uk

Over Three-Quarters of Security Professionals Concerned About AI Agent Risk, New ...

2026-02-03 19:05 uk.finance.yahoo.com

AI Rapidly Rendering Cyber Defenses Obsolete: Report - TechNewsWorld

2026-02-03 19:02 www.technewsworld.com

Meta Officially Ties Employee Performance to AI Usage; Microsoft On OpenClaw Security Risks

2026-02-03 18:40 www.theinformation.com

Moltbook “Reddit of Robots” — Agentic AI Civilization Formation, Security Externalities and a ...

2026-02-01 19:40 debuglies.com

Three Ways to Prepare Cybersecurity Teams to Navigate AI - GovTech

2026-02-01 19:34 www.govtech.com

Security Bite: X going open-source is bad news for anonymous alt accounts - 9to5Mac

2026-02-01 18:10 9to5mac.com

Palo Alto Networks Ties Chronosphere Deal To AI Security Platform Push - Simply Wall St

2026-01-31 20:13 simplywall.st

Palo Alto Networks Targets AI Security Edge With Chronosphere Observability Deal

2026-01-31 19:34 sg.finance.yahoo.com

AI agent Moltbot is a breakthrough and security nightmare with its own social network

2026-01-31 17:56 fortune.com

The AI Code Generation Governance Gap Is a Security Gap - Solutions Review

2026-01-30 20:21 solutionsreview.com

* This information is collected using Google Alerts, based on keywords set by our website. The data come from third-party websites, and we have no involvement with or responsibility for their content.

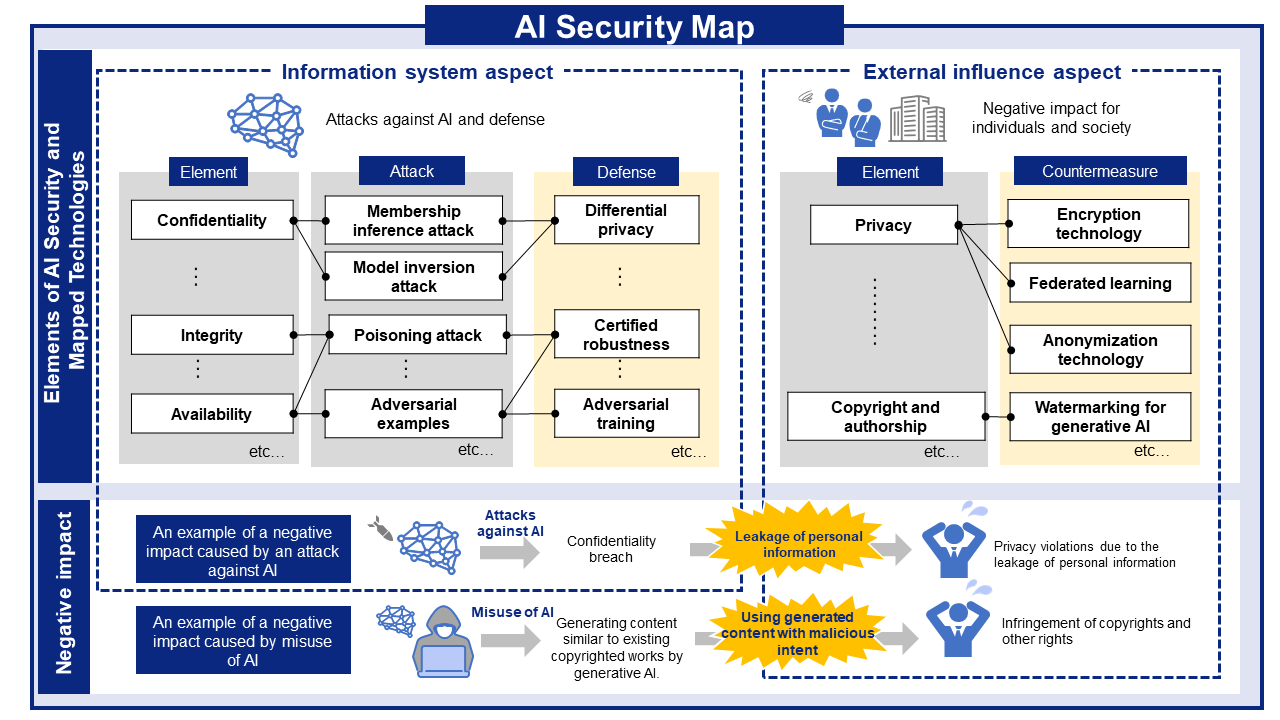

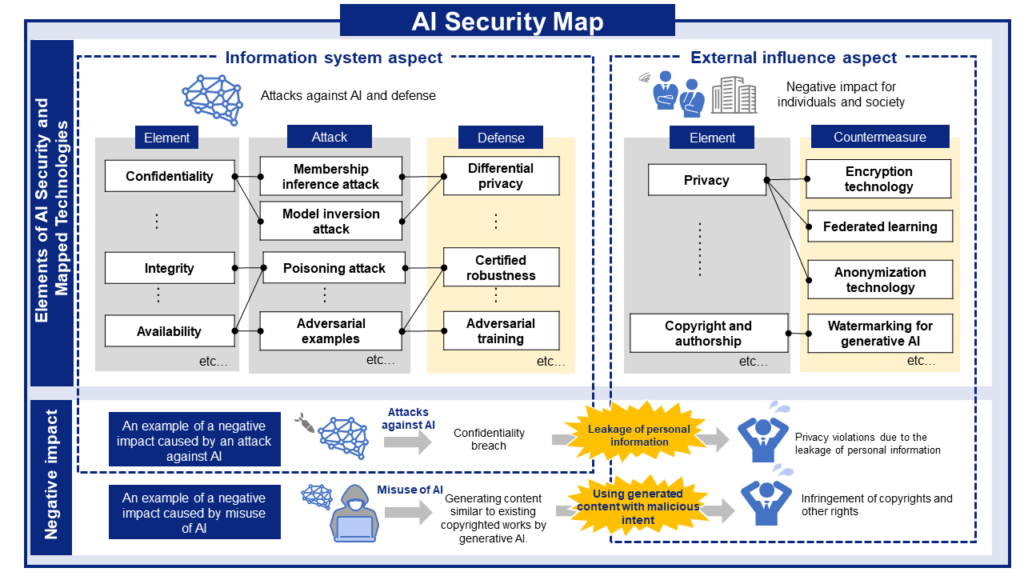

AI Risks, Impacts, and Mitigations at a Glance

AI security threats and related mitigations, organized from two perspectives: Information Systems, and People and Society.

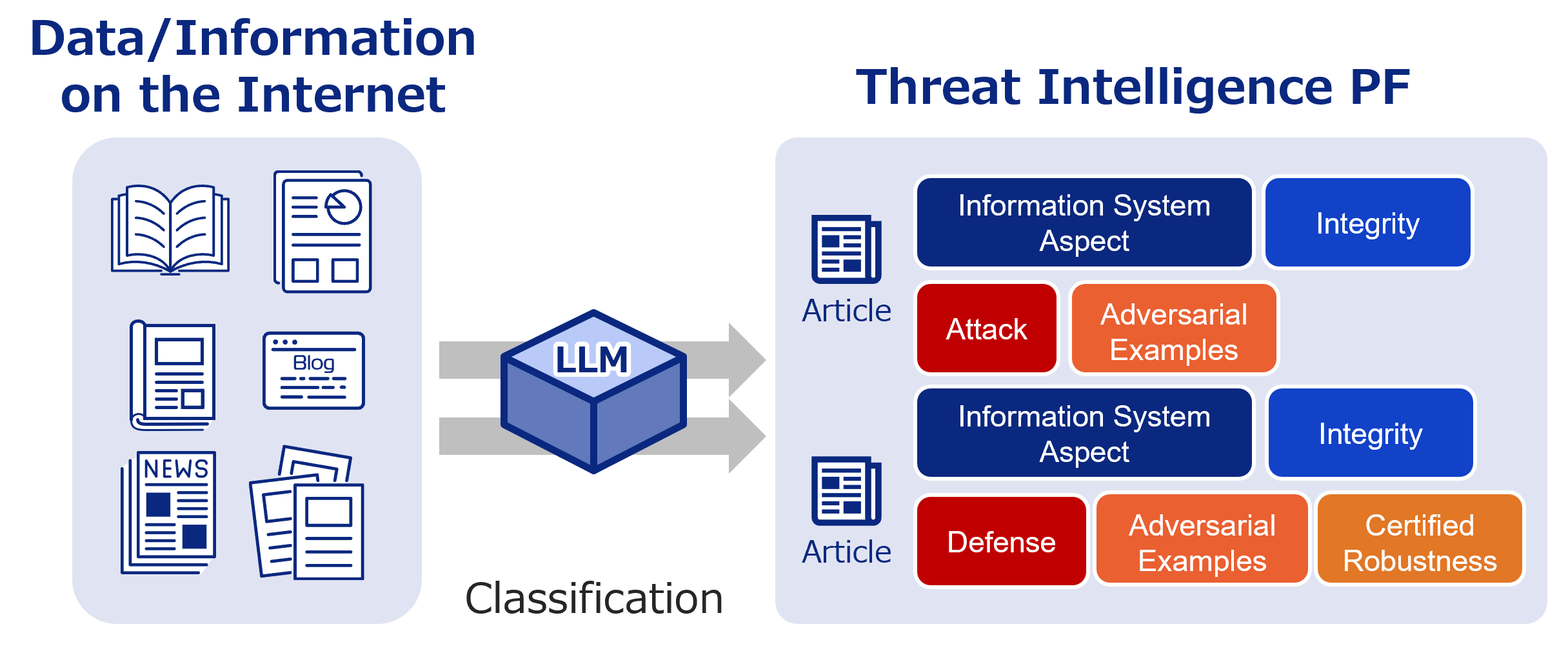

AI Security Information Gathering and Research

Labeled bibliographic information. Use it to research the latest trends and more.

Recent literature

Don’t believe everything you read: Understanding and Measuring MCP Behavior under Misleading Tool Descriptions

Authors: Zhihao Li, Boyang Ma, Xuelong Dai, Minghui Xu, Yue Zhang, Biwei Yan, Kun Li | Published: 2026-02-03

2026.02.03 | 2026.02.05

Detecting and Explaining Malware Family Evolution Using Rule-Based Drift Analysis

Authors: Olha Jurečková, Martin Jureček | Published: 2026-02-03

2026.02.03 | 2026.02.05

LogicScan: An LLM-driven Framework for Detecting Business Logic Vulnerabilities in Smart Contracts

Authors: Jiaqi Gao, Zijian Zhang, Yuqiang Sun, Ye Liu, Chengwei Liu, Han Liu, Yi Li, Yang Liu | Published: 2026-02-03

2026.02.03 | 2026.02.05
