
Related News

Last updated: 2026-02-04 06:01

- Over Three-Quarters of Security Professionals Concerned About AI Agent Risk, New ...
  2026-02-04 04:05 uk.finance.yahoo.com
- AI Rapidly Rendering Cyber Defenses Obsolete: Report - TechNewsWorld
  2026-02-04 04:02 www.technewsworld.com
- Meta Officially Ties Employee Performance to AI Usage; Microsoft On OpenClaw Security Risks
  2026-02-04 03:40 www.theinformation.com
- Moltbook “Reddit of Robots” — Agentic AI Civilization Formation, Security Externalities and a ...
  2026-02-01 19:40 debuglies.com
- Three Ways to Prepare Cybersecurity Teams to Navigate AI - GovTech
  2026-02-01 19:34 www.govtech.com
- Security Bite: X going open-source is bad news for anonymous alt accounts - 9to5Mac
  2026-02-01 18:10 9to5mac.com
- Palo Alto Networks Ties Chronosphere Deal To AI Security Platform Push - Simply Wall St
  2026-01-31 20:13 simplywall.st
- Palo Alto Networks Targets AI Security Edge With Chronosphere Observability Deal
  2026-01-31 19:34 sg.finance.yahoo.com
- AI agent Moltbot is a breakthrough and security nightmare with its own social network
  2026-01-31 17:56 fortune.com
- The AI Code Generation Governance Gap Is a Security Gap - Solutions Review
  2026-01-30 20:21 solutionsreview.com
- Silicon Valley's Favorite New AI Agent Has Serious Security Flaws - 404 Media
  2026-01-30 19:48 www.404media.co
- Moltbot's rapid rise poses early AI security test - Axios
  2026-01-29 20:19 www.axios.com
- The AI agent craze is molting into a security nightmare - Tech Brew
  2026-01-29 19:19 www.techbrew.com
- Cybanetix achieves record increase in recurring MDR revenues as AI security operations ...
  2026-01-29 19:18 newsbywire.com
- Foundation-sec-8B-Reasoning: The First Open-weight Security Reasoning Model
  2026-01-29 19:03 blogs.cisco.com
- Symmetry Systems Cements Leadership in Modern Data+AI Security for Enterprise On ...
  2026-01-28 20:23 sg.finance.yahoo.com
- Claroty Gets $150M to Lead in AI for Infrastructure Security - GovInfoSecurity
  2026-01-26 20:22 www.govinfosecurity.com
- Start 2026 with Confidence: Oracle AI Database 26ai Reaches New Security Milestones
  2026-01-26 18:37 blogs.oracle.com
- Upwind raises $250m series B to scale runtime-first cloud security - FinTech Global
  2026-01-26 16:29 fintech.global
- 10 Key AI Security Controls For 2026 - CRN
  2026-01-26 16:11 www.crn.com

* This information is collected through Google Alerts, based on keywords set by our website. The items come from third-party websites, and we have no involvement with or responsibility for their content.

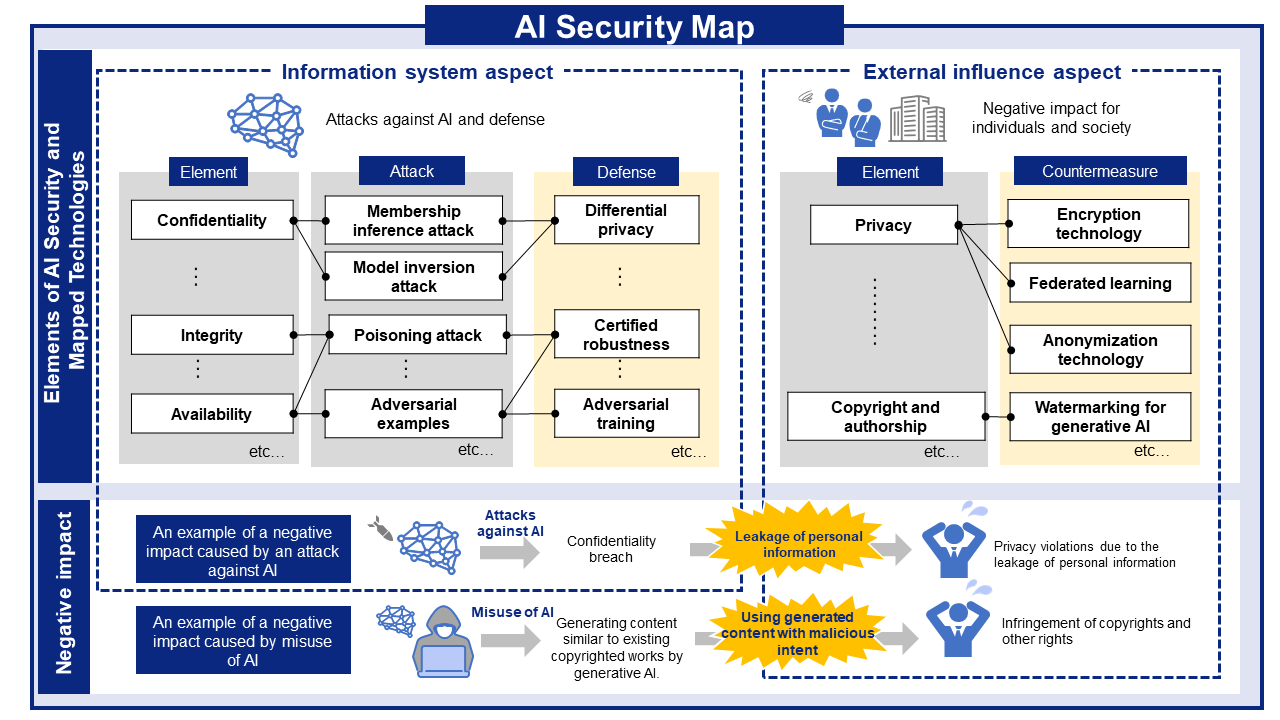

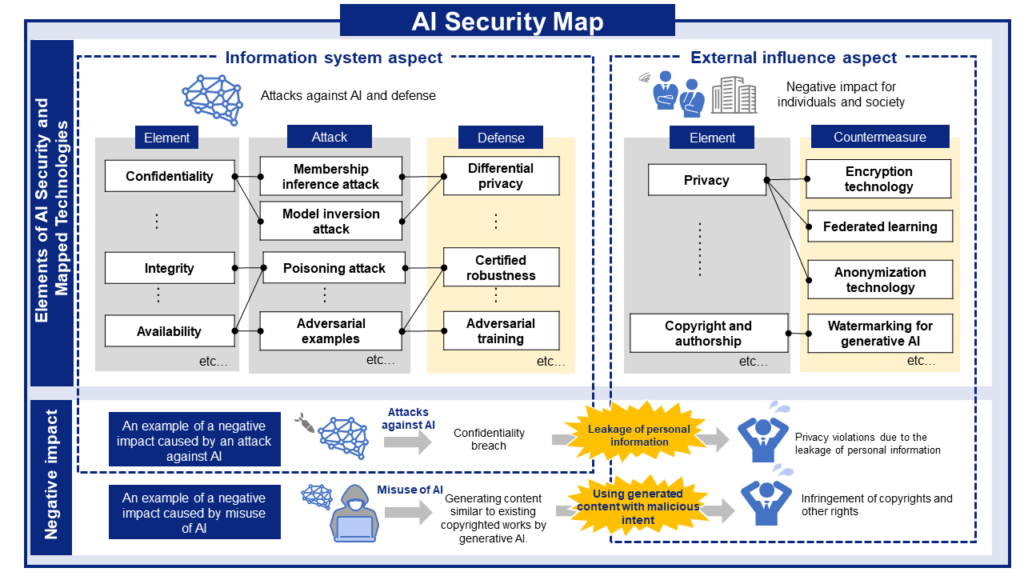

AI Risks, Impacts, and Mitigations at a Glance

AI security threats and related mitigations, organized from two perspectives: Information Systems, and People and Society.

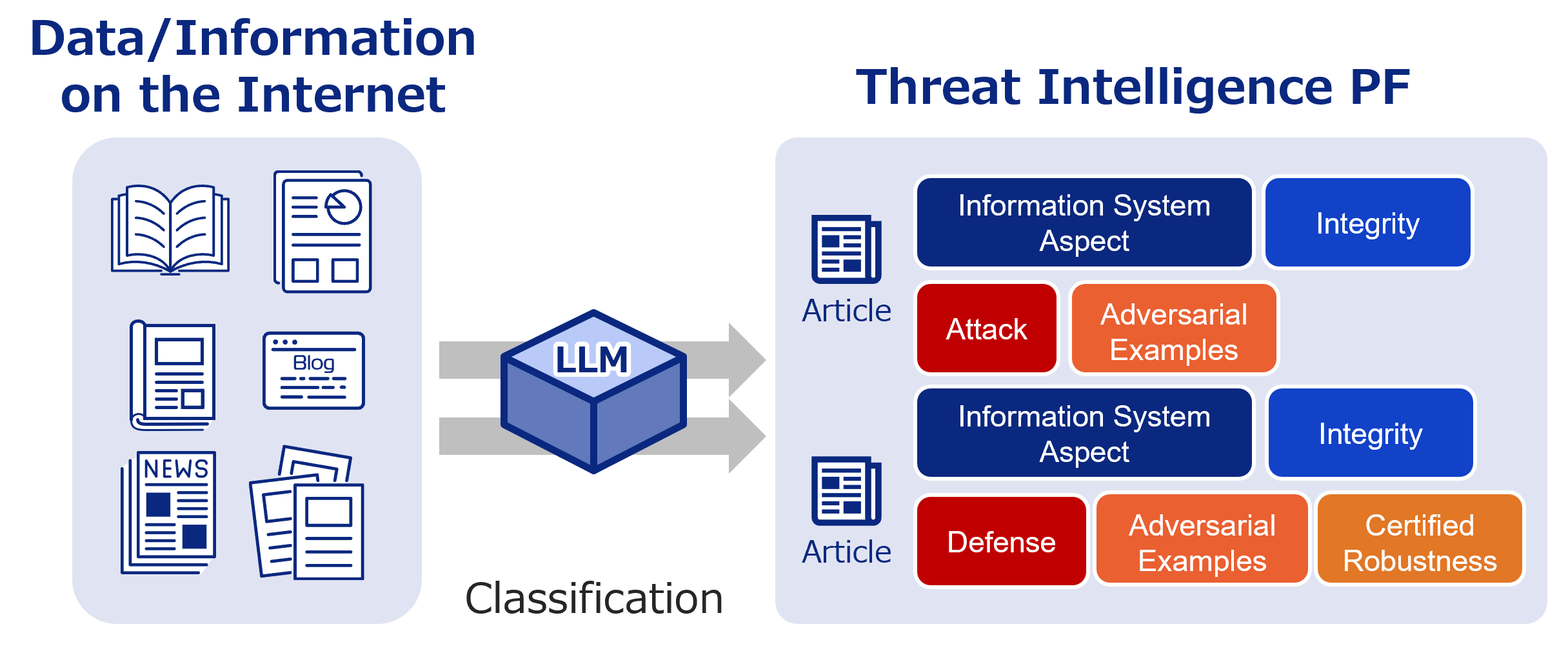

AI Security Information Gathering and Research

Labeled bibliographic information. Use it to research the latest trends and more.

Recent literature

David vs. Goliath: Verifiable Agent-to-Agent Jailbreaking via Reinforcement Learning

Authors: Samuel Nellessen, Tal Kachman | Published: 2026-02-02

2026.02.02, 2026.02.04

Guaranteeing Privacy in Hybrid Quantum Learning through Theoretical Mechanisms

Authors: Hoang M. Ngo, Tre’ R. Jeter, Incheol Shin, Wanli Xing, Tamer Kahveci, My T. Thai | Published: 2026-02-02

2026.02.02, 2026.02.04

Malware Detection Through Memory Analysis

Authors: Sarah Nassar | Published: 2026-02-02

2026.02.02, 2026.02.04
