
Related News

Last updated: 2026-03-01 06:01

7AI Agentic AI SOC Integrates with Extended Plan for AWS Security Hub - Yahoo Finance

2026-02-26 20:33 finance.yahoo.com

Key facts: Intel sees rise in put options; partners with Arqit for AI security - TradingView

2026-02-26 20:15 www.tradingview.com

Threat modeling AI applications | Microsoft Security Blog

2026-02-26 18:51 www.microsoft.com

AI Security Concerns Create Market Opening in Agentic Access Governance - TipRanks

2026-02-26 18:46 www.tipranks.com

AI Rattles Cybersecurity Markets: What Anthropic's Code Security Actually Does - Forbes

2026-02-24 19:38 www.forbes.com

AI-Powered Threats vs. AI-Powered Defenses: Winning the Cybersecurity Arms Race in 2026

2026-02-24 19:36 www.msspalert.com

Trust and transparency critical in cloud AI security - SC Media

2026-02-24 19:24 www.scworld.com

Center for Critical Infrastructure Security Awarded Maryland Cyber & AI Clinic Grant

2026-02-24 19:20 www.hstoday.us

Meta Security Researcher's AI Agent Accidentally Deleted Her Emails - PCMag UK

2026-02-24 17:46 uk.pcmag.com

AI Security in Azure with Microsoft Defender for Cloud: Learn the How, Join the Session

2026-02-24 17:23 techcommunity.microsoft.com

Cisco Live EMEA Recap: Turning AI Security into Partner Growth

2026-02-24 16:18 blogs.cisco.com

Harness launches Artifact Registry with AI security controls - Techzine Global

2026-02-24 16:08 www.techzine.eu

The Cyber-Resilient CISO Virtual Summit 2026 - Infosecurity Magazine

2026-02-24 15:54 www.infosecurity-magazine.com

Microsoft Security Dashboard Strengthens Control Over Expanding AI Ecosystems

2026-02-24 15:32 cloudwars.com

Zscaler Stock Scales Zero Trust And AI Agent Security (NASDAQ:ZS) | Seeking Alpha

2026-02-24 15:25 seekingalpha.com

Identity-First AI Security: Why CISOs Must Add Intent to the Equation - Bleeping Computer

2026-02-24 15:13 www.bleepingcomputer.com

Cybersecurity stock selling deepens on AI threat concerns. Why we're not bailing - CNBC

2026-02-23 20:21 www.cnbc.com

Cybersecurity Stocks Crater on AI Fears - But Hold On | The Tech Buzz

2026-02-23 20:17 www.techbuzz.ai

Anthropic's AI Bug Hunter Jolts Cyber Stocks - GovInfoSecurity

2026-02-22 17:46 www.govinfosecurity.com

Cybersecurity stocks drop after Anthropic debuts Claude Code Security - SiliconANGLE

2026-02-21 20:06 siliconangle.com

* This information was collected with Google Alerts using keywords set by our website. The data come from third-party websites and content, and we have no involvement with or responsibility for that content.

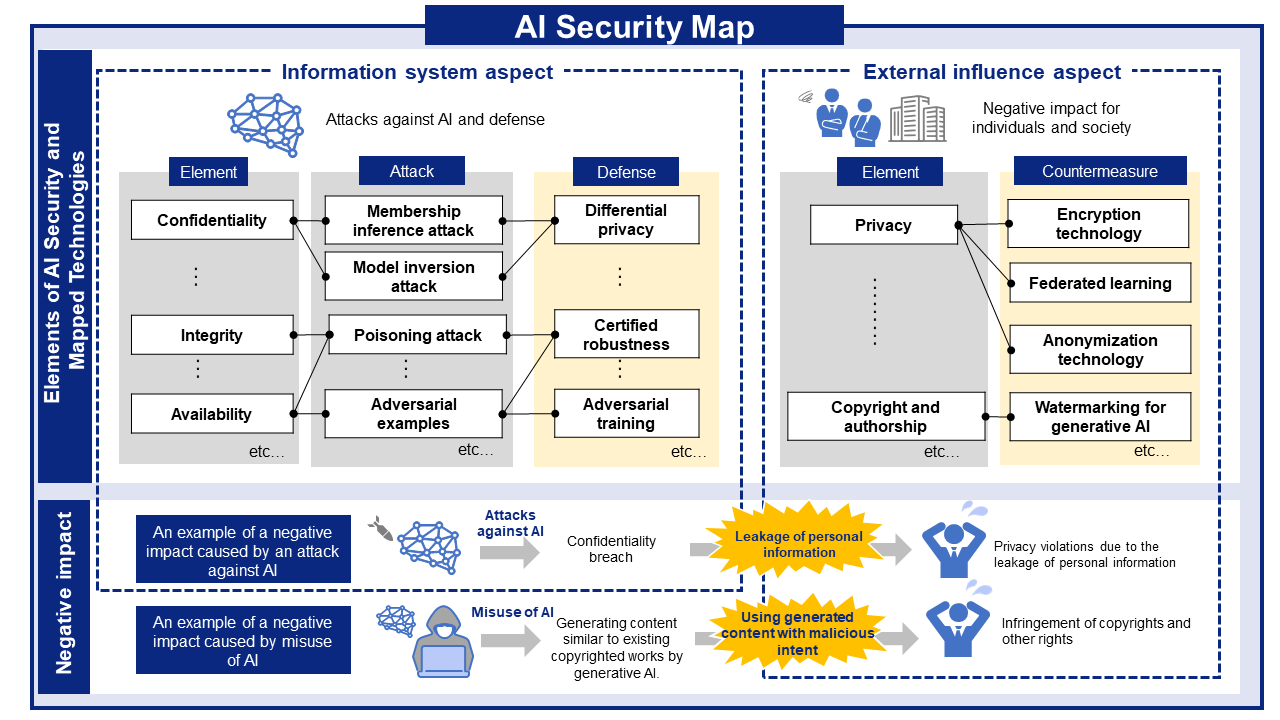

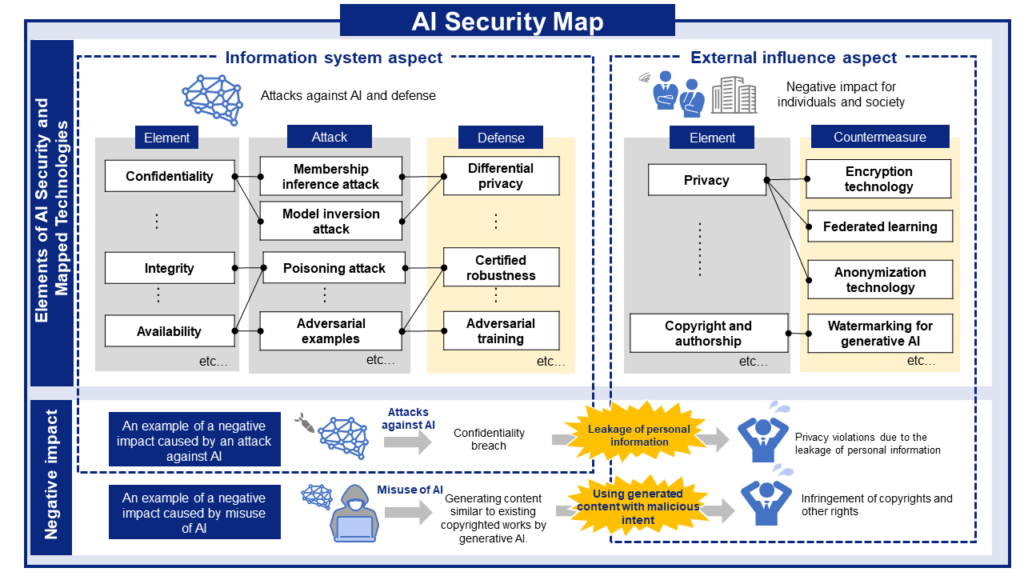

AI Risks, Impacts, and Mitigations at a Glance

AI security threats and related mitigations, organized from two perspectives: Information Systems, and People and Society.

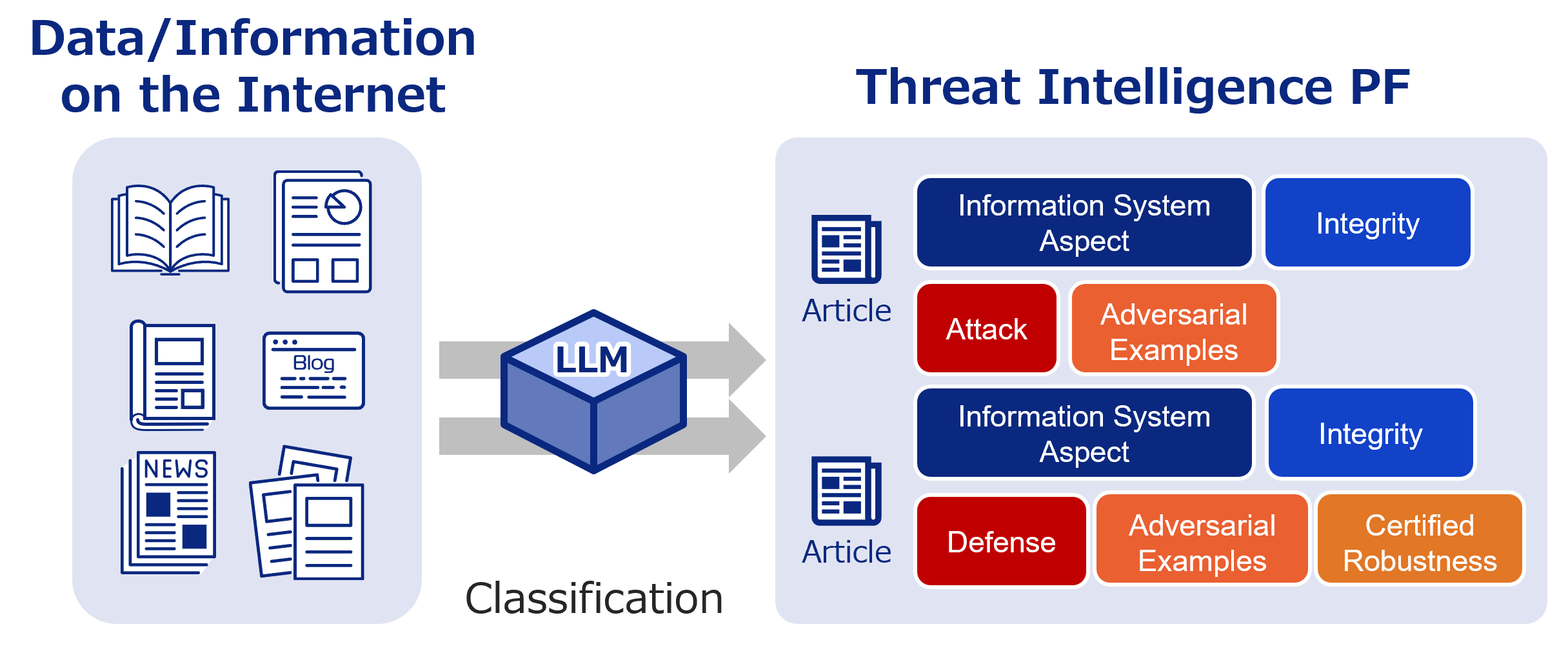

AI Security Information Gathering and Research

Labeled bibliographic information on AI security literature, useful for researching the latest trends and related topics.

Recent literature

LLM Novice Uplift on Dual-Use, In Silico Biology Tasks

Authors: Chen Bo Calvin Zhang, Christina Q. Knight, Nicholas Kruus, Jason Hausenloy, Pedro Medeiros, Nathaniel Li, Aiden Kim, Yury Orlovskiy, Coleman Breen, Bryce Cai, Jasper Götting, Andrew Bo Liu, Samira Nedungadi, Paula Rodriguez, Yannis Yiming He, Mohamed Shaaban, Zifan Wang, Seth Donoughe, Julian Michael | Published: 2026-02-26

2026.02.26 2026.02.28

A Decision-Theoretic Formalisation of Steganography With Applications to LLM Monitoring

Authors: Usman Anwar, Julianna Piskorz, David D. Baek, David Africa, Jim Weatherall, Max Tegmark, Christian Schroeder de Witt, Mihaela van der Schaar, David Krueger | Published: 2026-02-26

2026.02.26 2026.02.28

Assessing Deanonymization Risks with Stylometry-Assisted LLM Agent

Authors: Boyang Zhang, Yang Zhang | Published: 2026-02-26

2026.02.26 2026.02.28
