Site Contents

Related News

Last updated: 2026-01-13 06:01

Investors Shift Focus to AI Infrastructure, Security and Commerce | PYMNTS.com

2026-01-13 04:52 www.pymnts.com

The 20 Coolest Cloud Security Companies Of The 2026 Cloud 100 - CRN

2026-01-13 04:49 www.crn.com

AI Adoption Leaves Cloud Security Struggling To Keep Up, Report Finds - BusinessToday

2026-01-10 19:50 www.businesstoday.com.my

Cloudastructure launches AI-powered security enclosure - Yahoo Finance

2026-01-10 14:42 finance.yahoo.com

Easton Valley School Dist. implements gun detection solution software - KWQC

2026-01-10 14:18 www.kwqc.com

Cloudastructure launches AI-powered security enclosure - TipRanks.com

2026-01-10 14:12 www.tipranks.com

First Question Security Should Ask on AI Projects | CSA | Rich Mogull - LinkedIn

2026-01-10 12:42 www.linkedin.com

Why Trust Breaks in AI-Powered Security Patrol Robots, and What Operators Miss at 2 A.M.

2026-01-10 10:20 www.floridatoday.com

The 11 runtime attacks breaking AI security — and how CISOs are stopping them

2026-01-09 18:38 venturebeat.com

CrowdStrike to buy identity security startup SGNL for $740 million to tackle AI threats

2026-01-08 19:39 finance.yahoo.com

What happens to insider risk when AI becomes a coworker - Help Net Security

2026-01-08 18:03 www.helpnetsecurity.com

Google Chrome 143 Security Bypass — 3 Billion Users At Risk - Forbes

2026-01-08 17:48 www.forbes.com

AI security boom triples valuation of Israeli cyber startup to $9 billion within a year

2026-01-08 17:20 www.timesofisrael.com

When AI agents interact, risk can emerge without warning - Help Net Security

2026-01-07 18:30 www.helpnetsecurity.com

Reolink made a local AI hub for its security cameras | The Verge

2026-01-06 20:01 www.theverge.com

Introducing the Microsoft Defender Experts Suite: Elevate your security with expert-led services

2026-01-06 18:08 www.microsoft.com

As AI Moves Into Government, National Security Enters A New Era In 2026 - Forbes

2026-01-05 19:48 www.forbes.com

OTOPIQ launches AI-driven zero-trust OT security firm - SecurityBrief Asia

2026-01-05 19:37 securitybrief.asia

Cybersecurity leaders' resolutions for 2026 - CSO Online

2026-01-05 19:02 www.csoonline.com

AI in Higher Education: Protecting Student Data Privacy | EdTech Magazine

2026-01-05 18:20 edtechmagazine.com

* This information is collected through Google Alerts using keywords set by our website. The data come from third-party websites, and we have no involvement with or responsibility for their content.

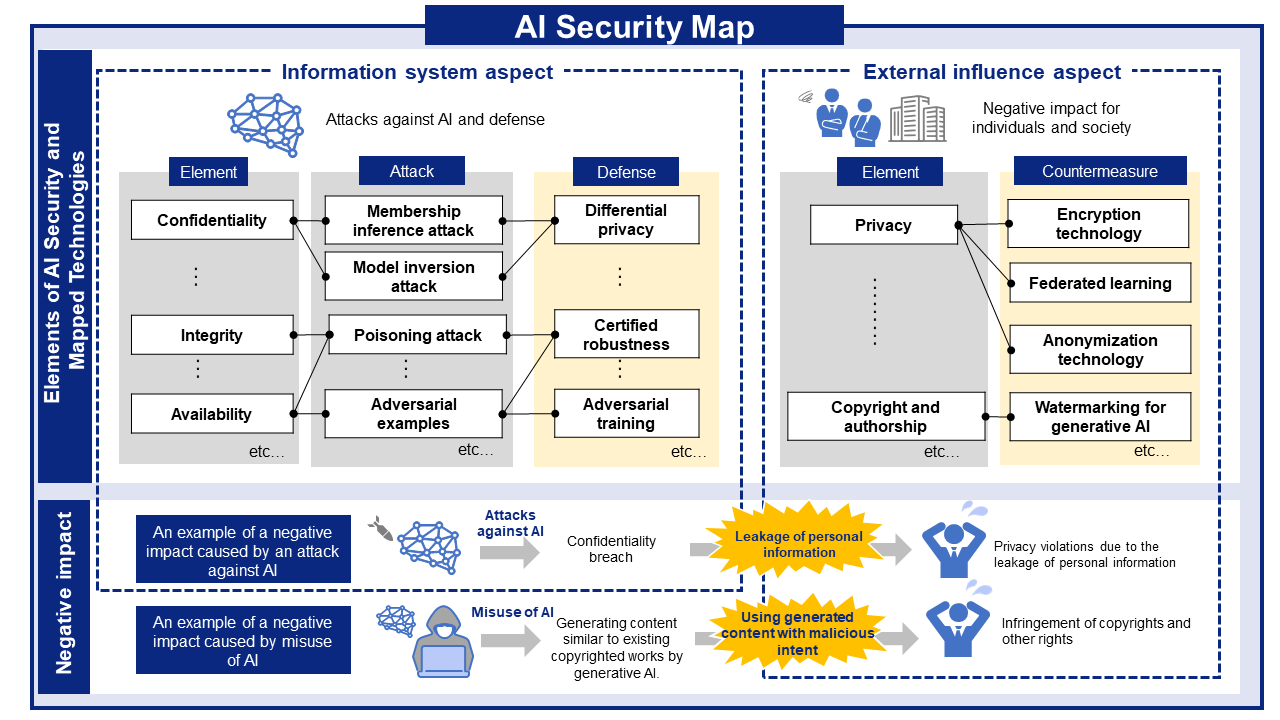

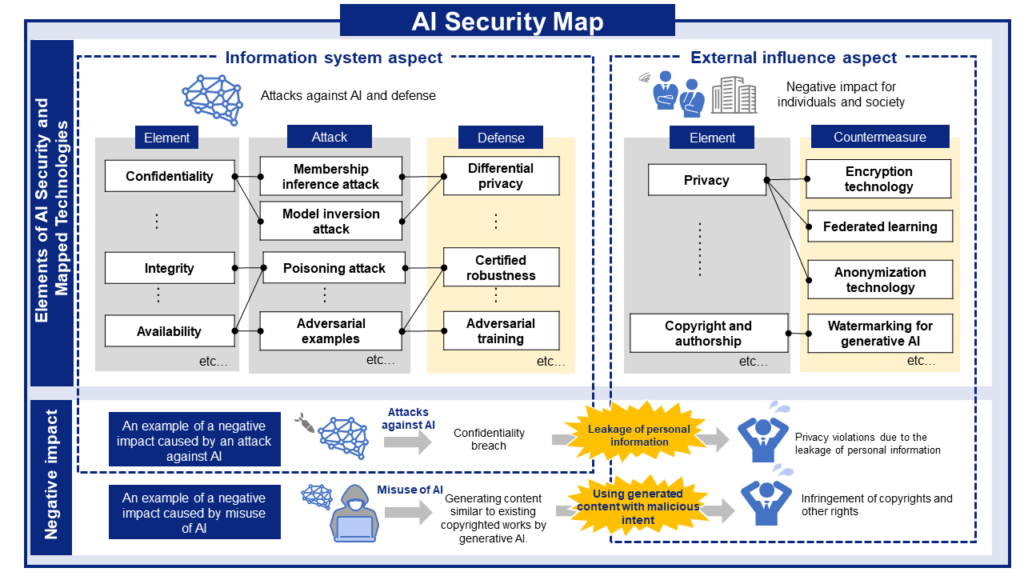

AI Risks, Impacts, and Mitigations at a Glance

AI security threats and their mitigations, organized from two perspectives: Information Systems, and People and Society.

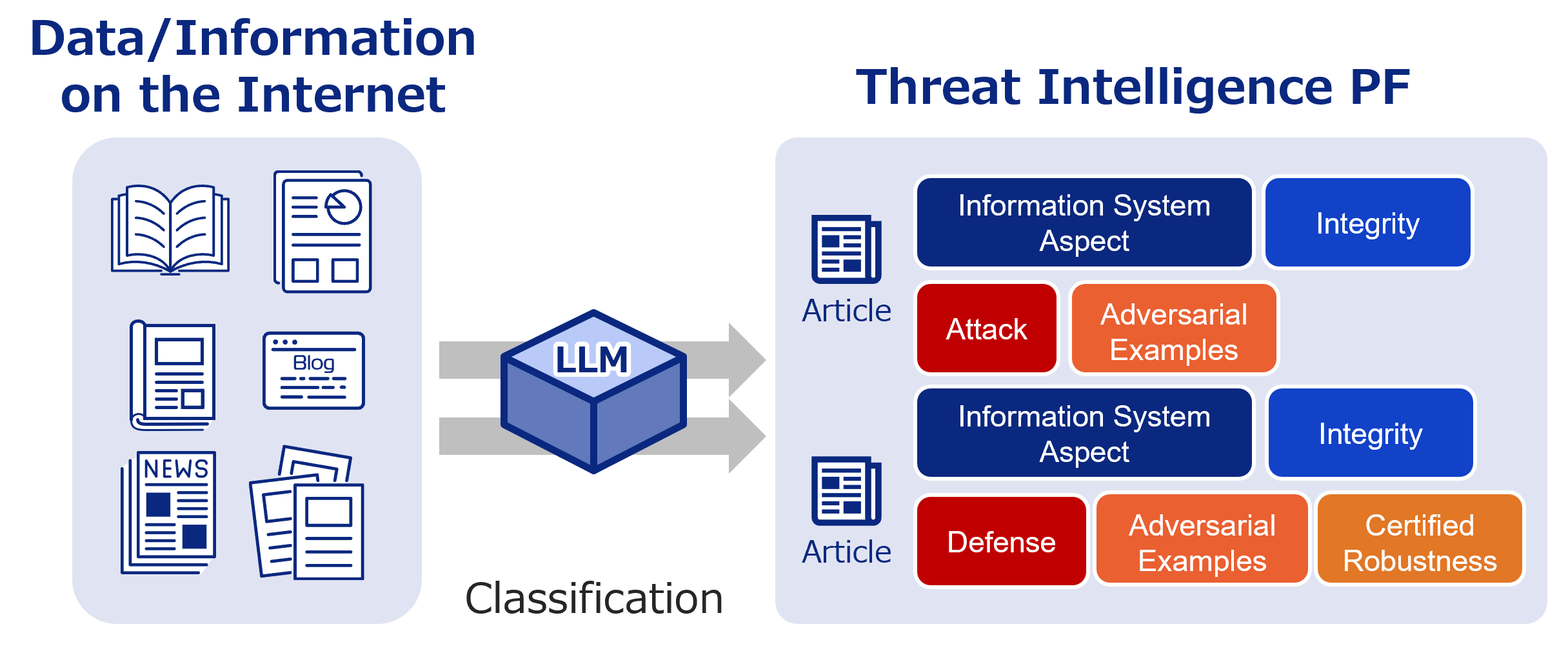

AI Security Information Gathering and Research

Labeled bibliographic information. Use it to research the latest trends and more.

Recent literature

Knowledge-to-Data: LLM-Driven Synthesis of Structured Network Traffic for Testbed-Free IDS Evaluation

Authors: Konstantinos E. Kampourakis, Vyron Kampourakis, Efstratios Chatzoglou, Georgios Kambourakis, Stefanos Gritzalis | Published: 2026-01-08

2026.01.08 | 2026.01.10

CurricuLLM: Designing Personalized and Workforce-Aligned Cybersecurity Curricula Using Fine-Tuned LLMs

Authors: Arthur Nijdam, Harri Kähkönen, Valtteri Niemi, Paul Stankovski Wagner, Sara Ramezanian | Published: 2026-01-08

2026.01.08 | 2026.01.10

Decentralized Privacy-Preserving Federal Learning of Computer Vision Models on Edge Devices

Authors: Damian Harenčák, Lukáš Gajdošech, Martin Madaras | Published: 2026-01-08

2026.01.08 | 2026.01.10
