
Related News

Last updated: 2026-01-08 06:01


When AI agents interact, risk can emerge without warning - Help Net Security

2026-01-08 03:30 www.helpnetsecurity.com

Reolink made a local AI hub for its security cameras | The Verge

2026-01-06 20:01 www.theverge.com

Introducing the Microsoft Defender Experts Suite: Elevate your security with expert-led services

2026-01-06 18:08 www.microsoft.com

As AI Moves Into Government, National Security Enters A New Era In 2026 - Forbes

2026-01-05 19:48 www.forbes.com

OTOPIQ launches AI-driven zero-trust OT security firm - SecurityBrief Asia

2026-01-05 19:37 securitybrief.asia

Cybersecurity leaders' resolutions for 2026 - CSO Online

2026-01-05 19:02 www.csoonline.com

AI in Higher Education: Protecting Student Data Privacy | EdTech Magazine

2026-01-05 18:20 edtechmagazine.com

Tech Trends: What States Should Fund With 2026 Cybersecurity Grants

2026-01-05 17:58 statetechmagazine.com

From SBOM to AI BOM: Rethinking supply chain security for AI native software - SD Times

2026-01-05 17:34 sdtimes.com

Auto-ISAC, Google partner to boost automotive sector cybersecurity | Google Cloud Blog

2026-01-05 17:20 cloud.google.com

Navigate the Money Matrix in Our Upcoming Series: Privacy, Security, and AI Explained

2026-01-05 16:37 www.troutman.com

Navigate the Money Matrix in Our Upcoming Series: Privacy, Security, and AI Explained

2026-01-05 16:19 www.jdsupra.com

Getting the right security in place for Agentic AI - SmartBrief

2026-01-05 16:05 www.smartbrief.com

Cyclotron Expands Offshore Hiring to Accelerate Client Success in AI, Azure, Security, and ...

2026-01-05 15:20 techrseries.com

AI Dominates Cybersecurity Predictions for 2026 - TechNewsWorld

2026-01-05 15:02 www.technewsworld.com

Grok AI Security Lapse Leads to Generation of Child Abuse Material on X | IBTimes UK

2026-01-04 16:28 www.ibtimes.co.uk

i-PRO predicts rising demand for edge AI in 2026 - Security Journal Americas

2026-01-01 20:14 securityjournalamericas.com

Verifiable Credentials Counter AI-Driven Identity Fraud - BankInfoSecurity

2026-01-01 17:18 www.bankinfosecurity.com

Strengthening Defenses Against AI-Driven Social Engineering - BankInfoSecurity

2026-01-01 17:10 www.bankinfosecurity.com

Copilot Studio Feature Enables Silent AI Backdoors - eSecurity Planet

2025-12-30 18:59 www.esecurityplanet.com

* This information has been collected using Google Alerts, based on keywords set by this website. The data come from third-party websites and content; we have no involvement in, and take no responsibility for, their content.

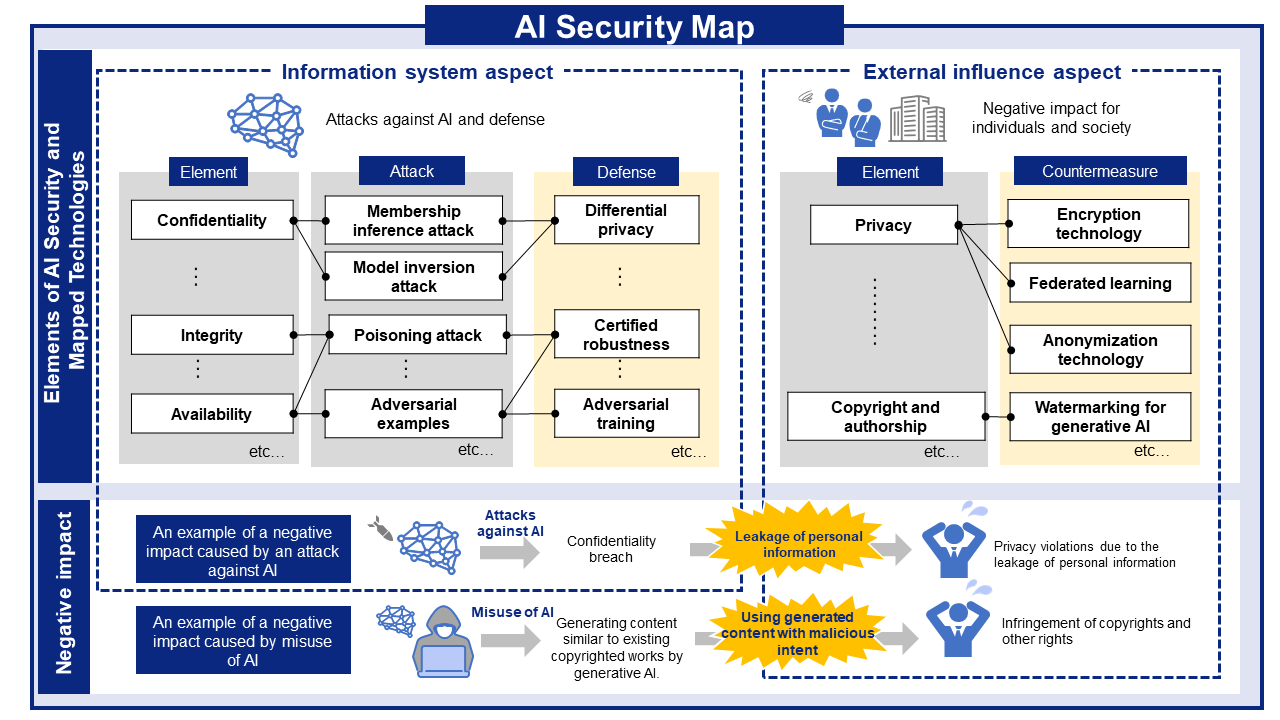

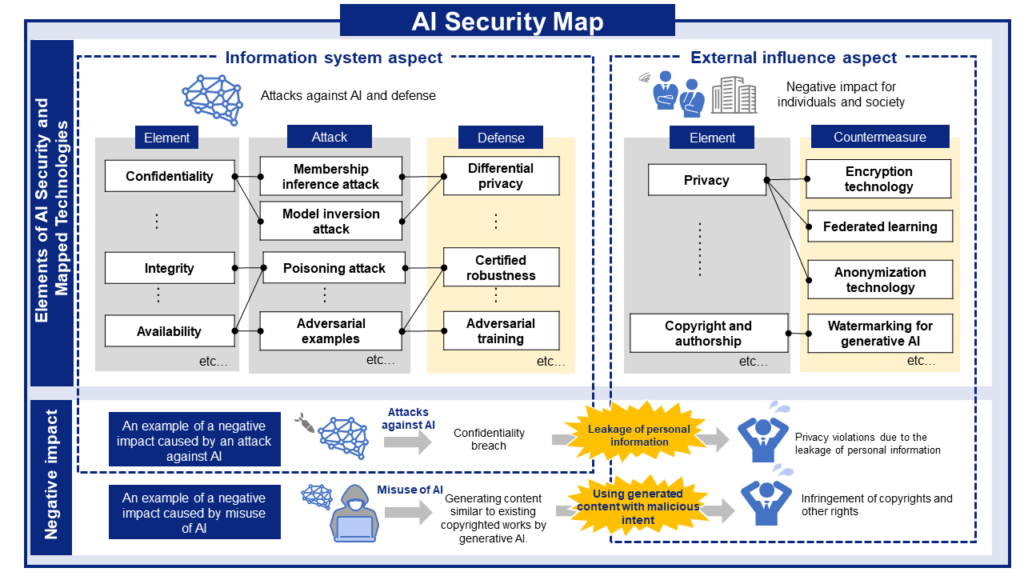

AI Risks, Impacts, and Mitigations at a Glance

AI security threats and related mitigations, organized from two perspectives: Information Systems, and People and Society.

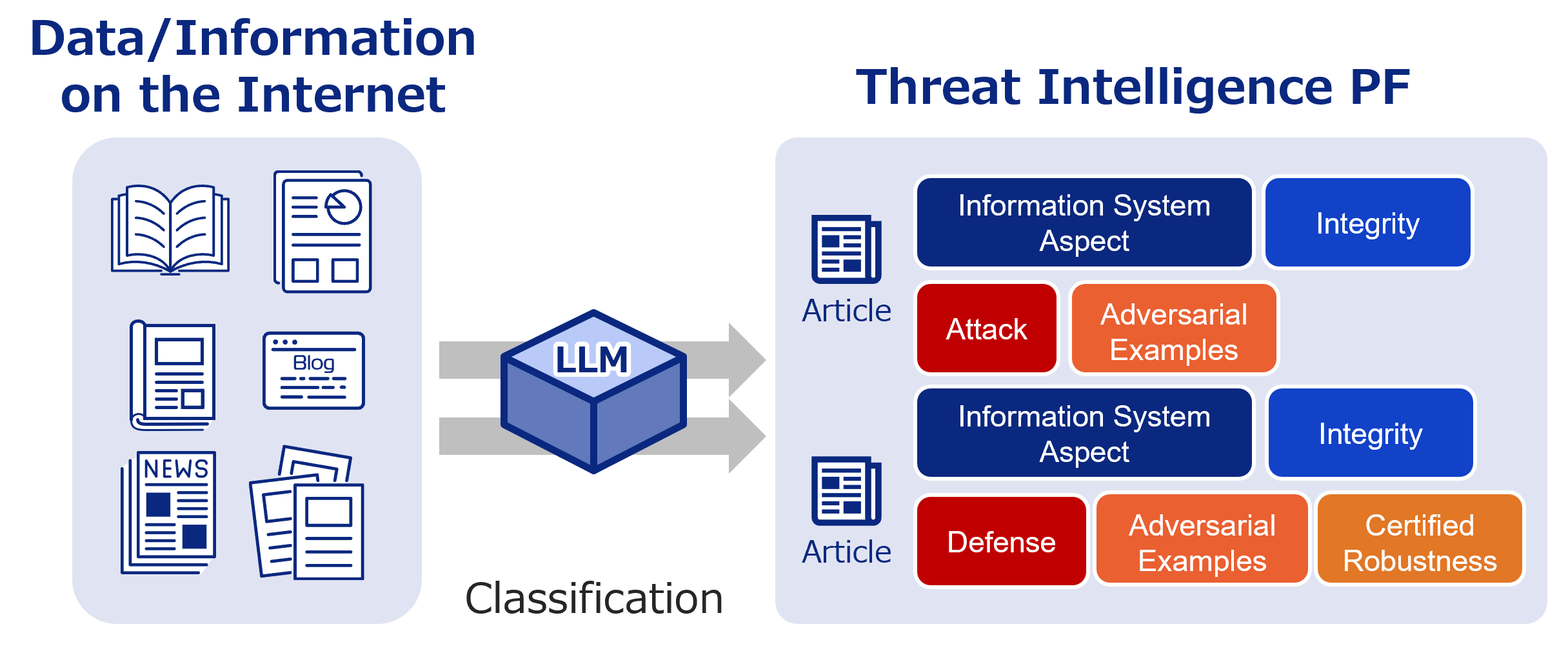

AI Security Information Gathering and Research

Labeled bibliographic information, useful for researching the latest trends and more.

Recent literature

SLIM: Stealthy Low-Coverage Black-Box Watermarking via Latent-Space Confusion Zones

Authors: Hengyu Wu, Yang Cao | Published: 2026-01-06

2026.01.06 | 2026.01.08

LLMs, You Can Evaluate It! Design of Multi-perspective Report Evaluation for Security Operation Centers

Authors: Hiroyuki Okada, Tatsumi Oba, Naoto Yanai | Published: 2026-01-06

2026.01.06 | 2026.01.08

JPU: Bridging Jailbreak Defense and Unlearning via On-Policy Path Rectification

Authors: Xi Wang, Songlei Jian, Shasha Li, Xiaopeng Li, Zhaoye Li, Bin Ji, Baosheng Wang, Jie Yu | Published: 2026-01-06

2026.01.06 | 2026.01.08
