
Related News

Last updated: 2026-02-06 06:05


How SCSU Is Rebuilding Campus Technology for the AI Era - BankInfoSecurity

2026-02-06 04:31 www.bankinfosecurity.com

The security implementation gap: Why Microsoft is supporting Operation Winter SHIELD

2026-02-06 03:09 www.microsoft.com

Cyberhaven Introduces Unified AI and Data Security Platform

2026-02-06 03:02 www.dbta.com

n8n security woes roll on as new critical flaws bypass December fix - The Register

2026-02-06 02:41 www.theregister.com

Q&A: Moltbook, OpenClaw, and the security risks of the new agentic-AI era | BetaKit

2026-02-06 02:26 betakit.com

Salt Security warns autonomous AI agents are the next major security blind spot

2026-02-06 02:06 www.itsecurityguru.org

AI, hybrid cloud spur security shift to consolidation - SecurityBrief Asia

2026-02-06 02:06 securitybrief.asia

What Security Teams Need to Know About OpenClaw, the AI Super Agent - CrowdStrike

2026-02-06 01:58 www.crowdstrike.com

Developing a National Cybersecurity Strategy in the AI Era: A Playbook - Cisco Blogs

2026-02-06 00:48 blogs.cisco.com

Microsoft Previews Copilot Data Connector for Sentinel to Strengthen AI-Aware Security Monitoring

2026-02-06 00:32 redmondmag.com

Google's AI partnership with Al Jazeera raises concerns among national security experts

2026-02-05 23:39 jewishinsider.com

Operant AI Launches Agent Protector: The First Real-Time Agentic Security ... - The Manila Times

2026-02-05 23:10 www.manilatimes.net

Aramco Partners With CrowdStrike to Advance Cybersecurity in Saudi Arabia

2026-02-05 22:17 www.crowdstrike.com

AI security worries stall enterprise production deployments | TechTarget

2026-02-04 20:20 www.techtarget.com

Nvidia AI chip sales to China stalled by US security review, FT reports | Reuters

2026-02-04 19:44 www.reuters.com

Heartland sheriff's office warns of privacy, security risks with AI-generated photos - KFVS12

2026-02-04 18:55 www.kfvs12.com

AI and Regulation Redefine Application Security, New Global Study Finds

2026-02-04 18:16 www.itsecurityguru.org

AI is sharing security evasion tips on forum run by agents - SDxCentral

2026-02-04 18:15 www.sdxcentral.com

Autonomous attacks ushered cybercrime into AI era in 2025 - Cybersecurity Dive

2026-02-04 18:06 www.cybersecuritydive.com

Varonis acquires AI TRiSM - Global Security Mag Online

2026-02-04 18:05 www.globalsecuritymag.fr

* This information has been collected using Google Alerts based on keywords set by our website. These data are obtained from third-party websites and content, and we do not have any involvement with or responsibility for their content.

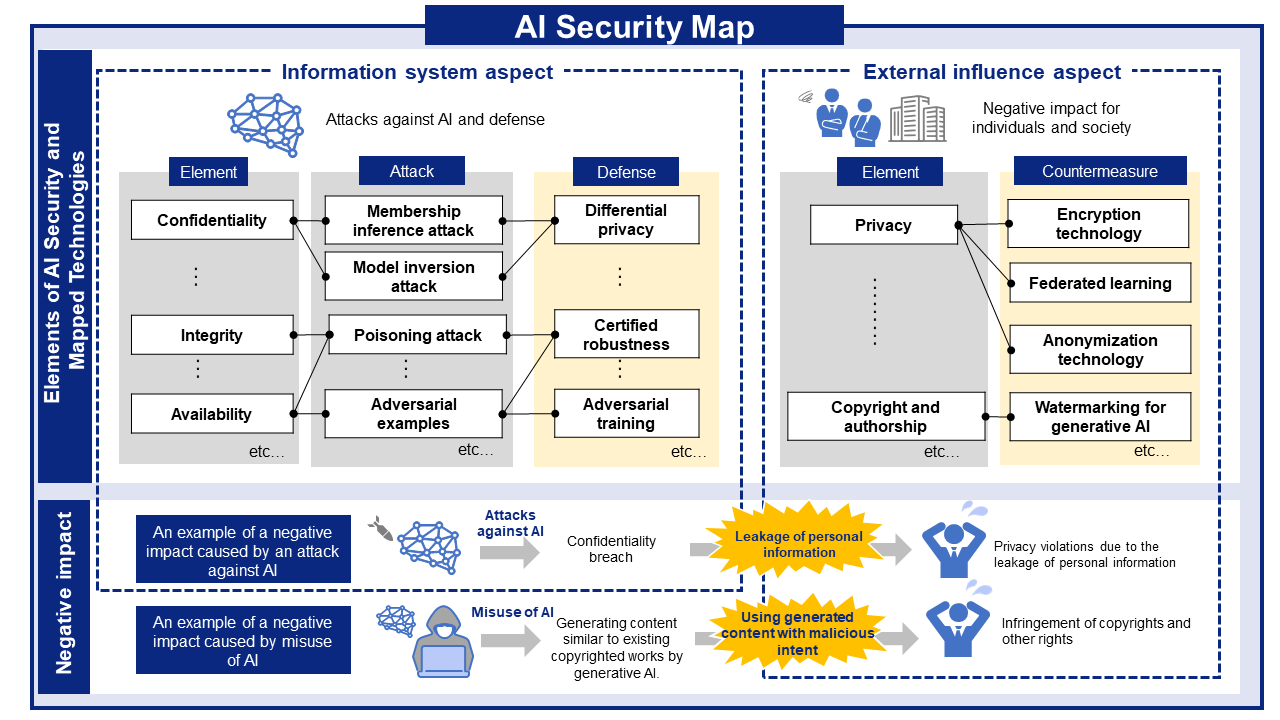

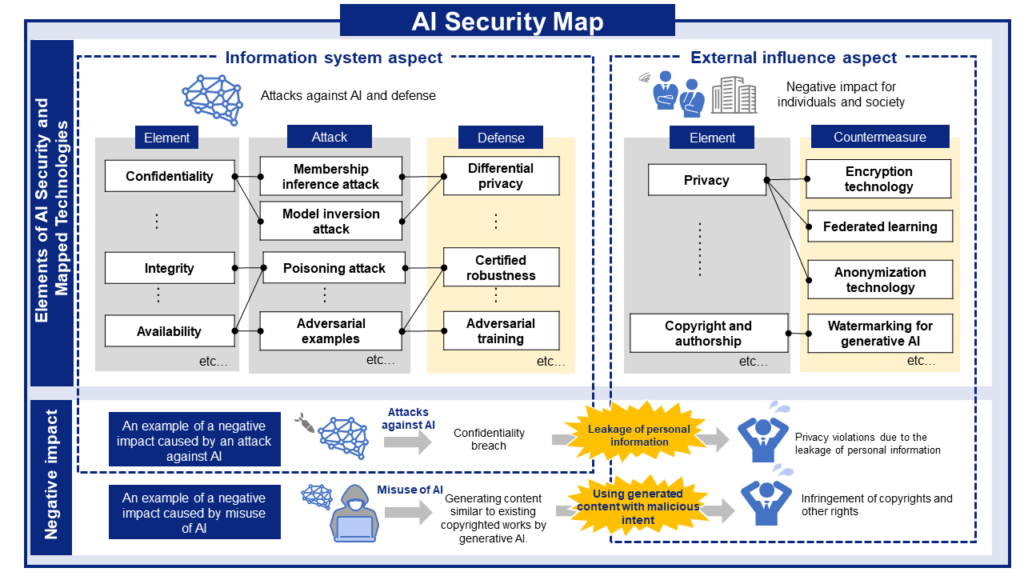

AI Risks, Impacts, and Mitigations at a Glance

AI security threats and related mitigations, organized from two perspectives: Information Systems, and People and Society.

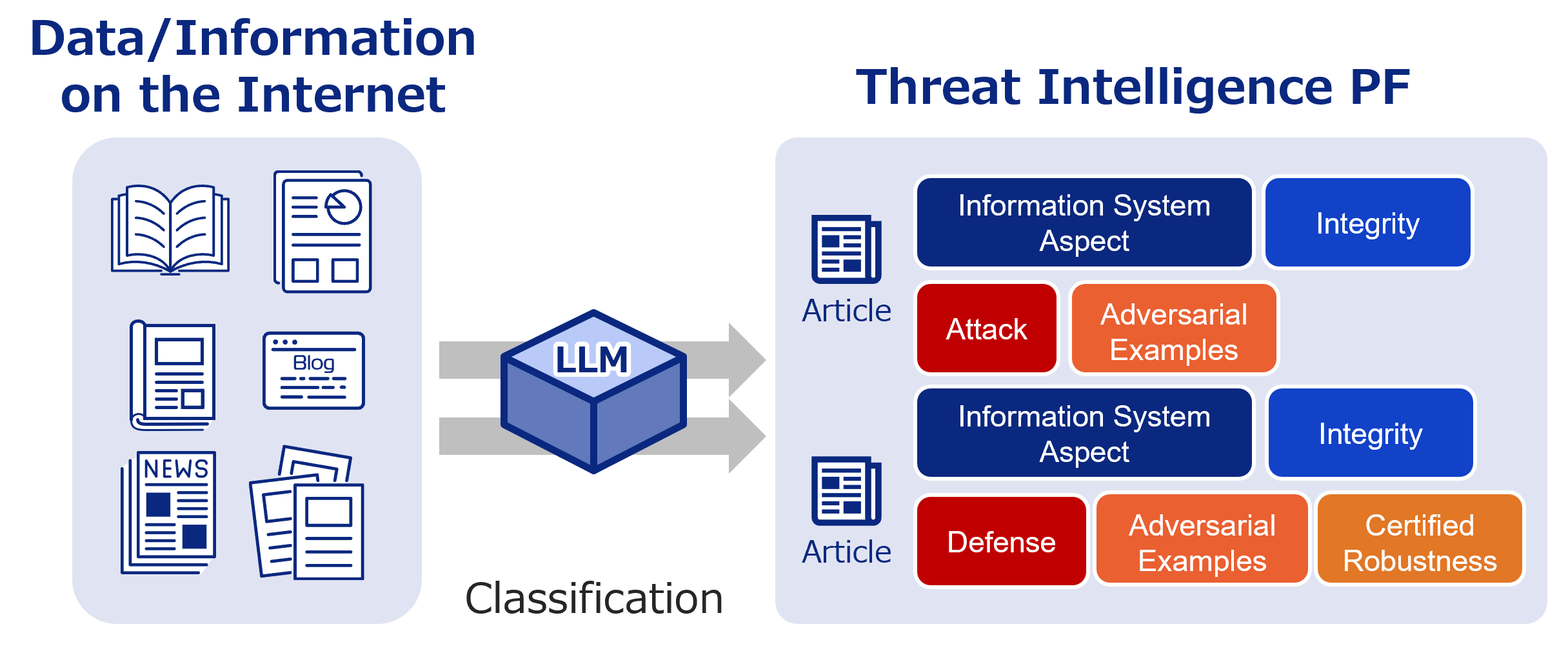

AI Security Information Gathering and Research

Labeled bibliographic information on AI security. Use it to research the latest trends and related topics.

Recent literature

Comparative Insights on Adversarial Machine Learning from Industry and Academia: A User-Study Approach

Authors: Vishruti Kakkad, Paul Chung, Hanan Hibshi, Maverick Woo | Published: 2026-02-04

2026.02.04 | 2026.02.06

How Few-shot Demonstrations Affect Prompt-based Defenses Against LLM Jailbreak Attacks

Authors: Yanshu Wang, Shuaishuai Yang, Jingjing He, Tong Yang | Published: 2026-02-04

2026.02.04 | 2026.02.06

Semantic Consensus Decoding: Backdoor Defense for Verilog Code Generation

Authors: Guang Yang, Xing Hu, Xiang Chen, Xin Xia | Published: 2026-02-04

2026.02.04 | 2026.02.06
