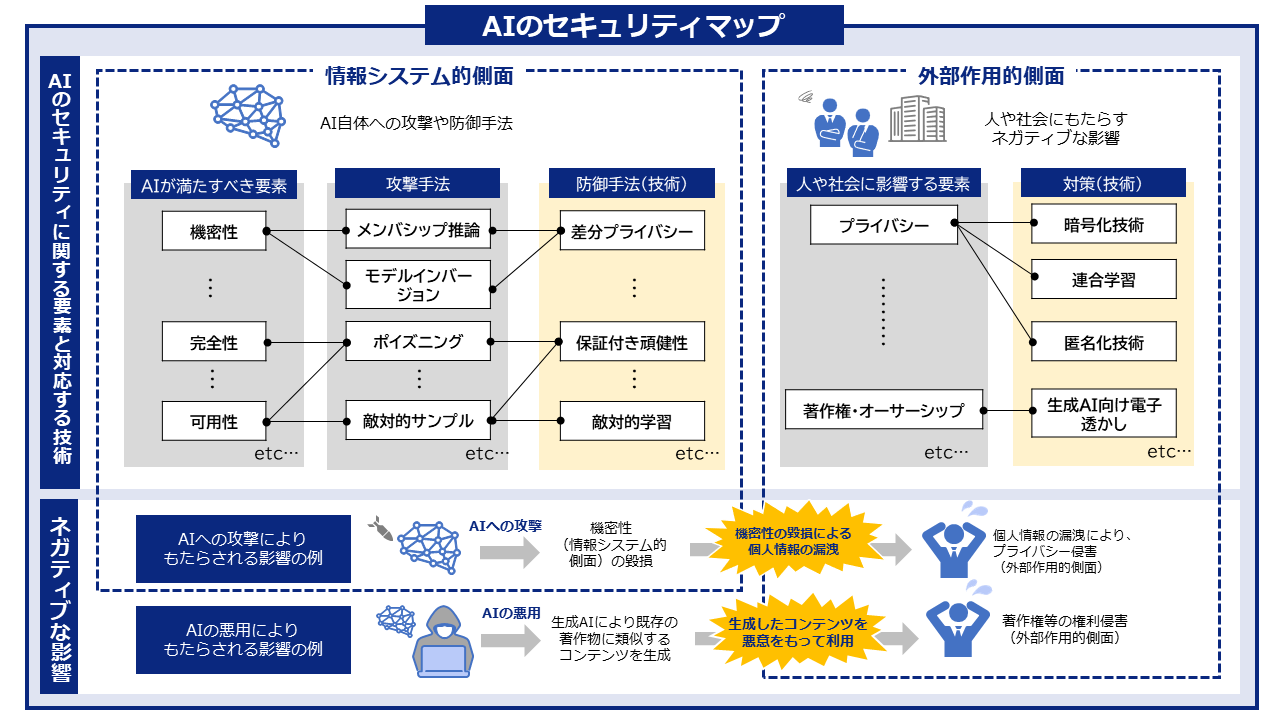

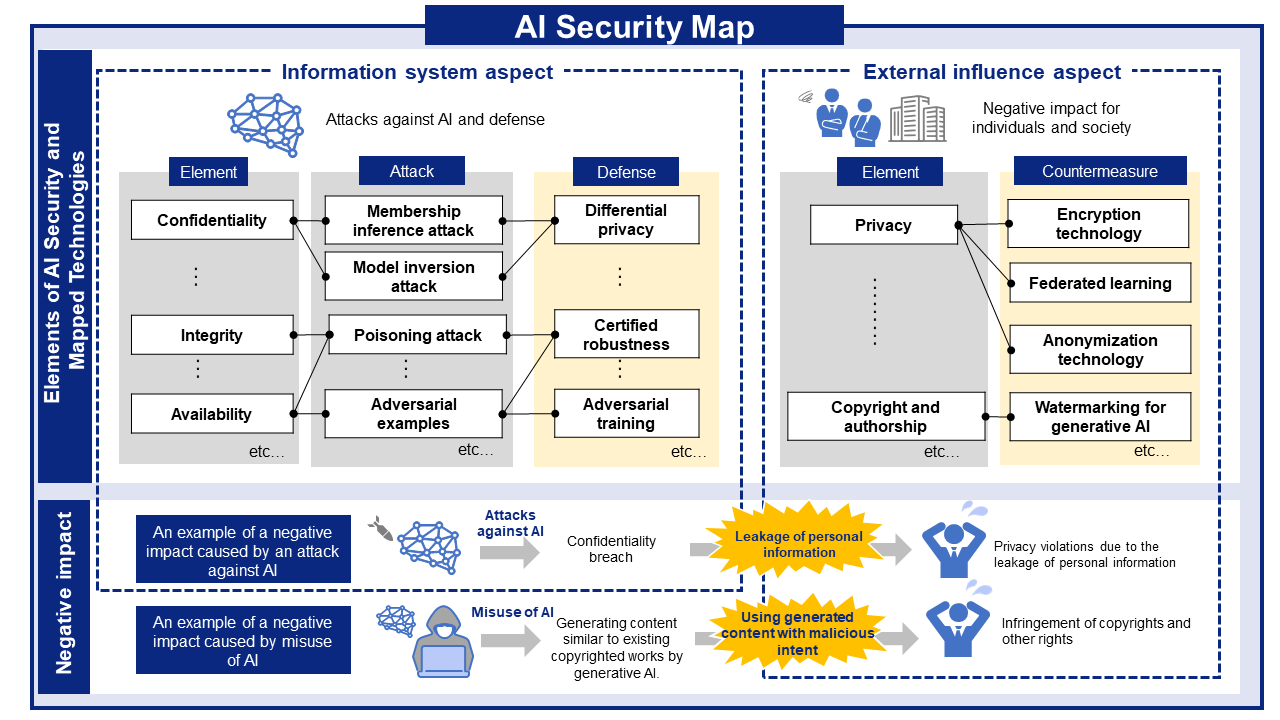

The AI Security Map covers two aspects: the elements that AI should satisfy within information systems (the information system aspect), and the elements through which individuals and society are affected when AI is attacked or misused (the external influence aspect). For both aspects, the map organizes the negative impacts, the attacks and factors that cause them, and the corresponding defense methods and countermeasures. It also systematically examines the relationships between the two aspects, clarifying how the compromise or misuse of AI can affect individuals and society. Figure 1 provides an overview of the AI Security Map. For details, please refer to About AI Security Map.

Figure 1: An overview of the AI Security Map

Information system aspect

| Elements that AI should satisfy | Definition and Negative impact |
|---|---|
| Confidentiality | Definition: AI data and models are not accessed by unauthorized individuals. |
| Integrity | Definition: The AI models and algorithms have not been tampered with, and the AI outputs are as expected. |
| Availability | Definition: AI can provide the necessary features and services when needed. |
| Explainability | Definition: AI can explain the basis and process of its output. |
| Output Fairness | Definition: AI does not produce biased outputs towards specific individuals or groups. |
| Safety | Definition: AI is equipped with safety mechanisms to prevent harm to human life, body, property, or mind. |
| Accuracy | Definition: AI meets a certain level of accuracy for achieving objectives. |
| Controllability | Definition: AI is controlled by administrators and does not run amok or affect other environments. |
| Reliability | Definition: Output from AI is reliable. |

External influence aspect

| Elements that impact individuals and society | Definition and Negative impact |
|---|---|
| Cyber attack | Definition: AI is not used for cyber attacks. |
| Military use | Definition: AI is not used for military purposes. |
| Privacy | Definition: AI does not infringe on privacy and complies with privacy laws and customs. |
| Disinformation | Definition: AI is not used to intentionally create disinformation, or it can identify such disinformation. |
| Misinformation | Definition: AI does not output misinformation, or it can identify such misinformation. |
| Usability | Definition: AI meets a certain level of usability to achieve objectives. |
| Consumer fairness | Definition: No harm is caused by unfair biased output from AI. |
| Plagiarism | Definition: AI is not used for plagiarism. |
| Copyright and authorship | Definition: AI complies with laws and customs related to copyright and authorship. |
| Transparency | Definition: It is clearly stated that the system uses AI, including information on its limitations and risks associated with its use. |
| Reputation | Definition: The AI system provider is evaluated to a certain standard and is trusted. |
| Compliance with laws and regulations | Definition: AI is used for lawful purposes and produces output or actions that comply with the law. |
| Human-centric principle | Definition: AI is appropriately used for the benefit of humans. |
| Ethics | Definition: AI behaves in a manner consistent with societal norms. |
| Economy | Definition: AI behaves in a manner consistent with societal norms. |
| Physical impact | Definition: The use of AI does not cause physical harm to people. |
| Psychological impact | Definition: The use of AI does not cause psychological harm to people. |
| Financial impact | Definition: The use of AI does not cause financial harm to people. |
| Medical care | Definition: The use of AI contributes to the development of advanced and safe medical care. |
| Critical infrastructure | Definition: The use of AI contributes to the safe operation of critical infrastructure. |
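
For readers who want to work with the map programmatically, the two-aspect structure could be modeled roughly as follows. This is only a minimal sketch, not part of the AI Security Map itself: the names `Aspect`, `Element`, `SecurityMap`, and `build_example_map` are hypothetical, only four elements are included, and the two links between aspects are illustrative assumptions rather than relationships taken from the map. The definitions are copied from the tables in this section.

```python
from dataclasses import dataclass, field
from enum import Enum


class Aspect(Enum):
    INFORMATION_SYSTEM = "Information system aspect"
    EXTERNAL_INFLUENCE = "External influence aspect"


@dataclass(frozen=True)
class Element:
    name: str
    aspect: Aspect
    definition: str


@dataclass
class SecurityMap:
    """Hypothetical container: elements plus links between the two aspects."""
    elements: dict = field(default_factory=dict)  # name -> Element
    links: dict = field(default_factory=dict)     # info-system name -> set of external names

    def add(self, element: Element) -> None:
        self.elements[element.name] = element

    def link(self, source: str, target: str) -> None:
        # A link always runs from the information system aspect
        # to the external influence aspect.
        src, dst = self.elements[source], self.elements[target]
        if src.aspect is not Aspect.INFORMATION_SYSTEM:
            raise ValueError(f"{source} is not an information system element")
        if dst.aspect is not Aspect.EXTERNAL_INFLUENCE:
            raise ValueError(f"{target} is not an external influence element")
        self.links.setdefault(source, set()).add(target)

    def by_aspect(self, aspect: Aspect) -> list:
        return sorted(e.name for e in self.elements.values() if e.aspect is aspect)

    def external_impacts(self, source: str) -> list:
        return sorted(self.links.get(source, set()))


def build_example_map() -> SecurityMap:
    m = SecurityMap()
    m.add(Element("Confidentiality", Aspect.INFORMATION_SYSTEM,
                  "AI data and models are not accessed by unauthorized individuals."))
    m.add(Element("Integrity", Aspect.INFORMATION_SYSTEM,
                  "The AI models and algorithms have not been tampered with, "
                  "and the AI outputs are as expected."))
    m.add(Element("Privacy", Aspect.EXTERNAL_INFLUENCE,
                  "AI does not infringe on privacy and complies with privacy laws and customs."))
    m.add(Element("Misinformation", Aspect.EXTERNAL_INFLUENCE,
                  "AI does not output misinformation, or it can identify such misinformation."))
    # ASSUMPTION: these two links are illustrative placeholders only;
    # they are not relationships stated in this section.
    m.link("Confidentiality", "Privacy")
    m.link("Integrity", "Misinformation")
    return m


if __name__ == "__main__":
    example = build_example_map()
    print(example.by_aspect(Aspect.INFORMATION_SYSTEM))
    print(example.external_impacts("Confidentiality"))
```

Keeping the links as a separate mapping, rather than as a field on each element, lets the element definitions stay immutable while the relationships between the two aspects are filled in from the map's own documentation.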
